#TAR EXTRACT XZ HOW TO#

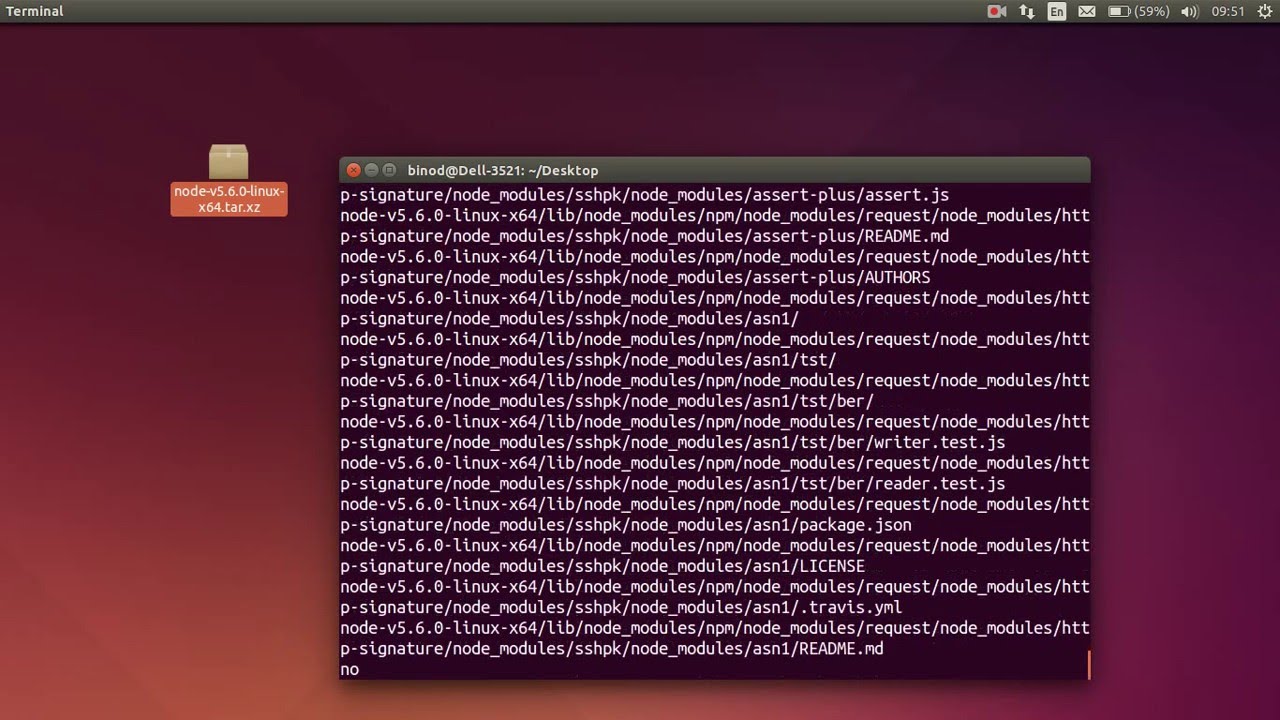

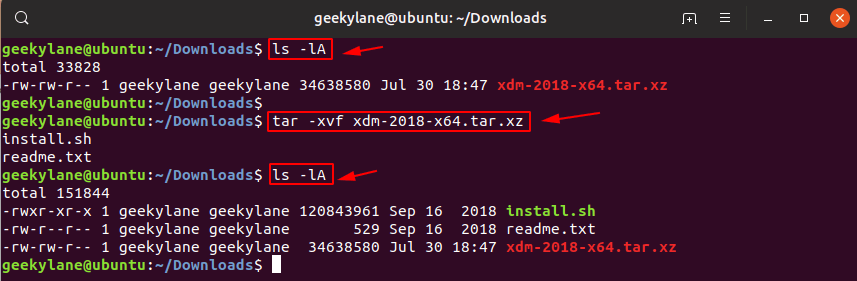

If you are wondering how to deal with Tar.xz files on your Linux system, you have come to the right place: we will discuss how to uncompress the data in Tar.xz files, with additional tutorials on the basic actions usually performed on them. To read and write Tar.xz files on Linux, there is XZ-utils, a set of commands you can use to compress, uncompress, read, and write Tar.xz data.

Installing XZ-Utils on Your Linux System

Before we discuss how to access Tar.xz files, you need to make sure that you have the main tool you will need to work with them. As mentioned before, XZ-utils is the main set of commands you will be using, so let's take a look at how to get this tool on your system. Closely follow these steps to successfully install XZ-utils on your system.
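The install steps themselves are not listed here, so the following is only a sketch. It assumes a Debian- or Ubuntu-style system where the package is called `xz-utils` (shown as a comment, since it needs root), and it uses made-up names (`sample/`, `sample.tar.xz`) in a scratch directory to show creating, listing, and extracting a Tar.xz archive with GNU tar's `-J` option.

```shell
# Assumption: Debian/Ubuntu package name; other distros use their own package manager.
#   sudo apt install xz-utils

# Work in a throwaway directory so nothing else on the system is touched.
workdir=$(mktemp -d)
mkdir -p "$workdir/sample" "$workdir/out"
echo "hello xz" > "$workdir/sample/hello.txt"

# Create: -c create, -J filter through xz, -f archive name, -C change directory first.
tar -cJf "$workdir/sample.tar.xz" -C "$workdir" sample

# List the contents without extracting.
tar -tJf "$workdir/sample.tar.xz"

# Extract into a separate directory and show the recovered file.
tar -xJf "$workdir/sample.tar.xz" -C "$workdir/out"
cat "$workdir/out/sample/hello.txt"
```

For an archive you already have, only the last `tar -xJf` step is needed, pointed at your own file.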

Tar.xz is not regularly used in everyday work because it is comparatively complex. It is generally used by IT specialists who have to keep track of multiple variables and are familiar with how Tar.xz works. However, Tar.xz is the predominant compressed file type used in Linux and its distros, where it is mainly used for compressing package files and kernel archives.

#TAR EXTRACT XZ RAR#

It is similar to other compressed file types such as RAR or ZIP but is better and more efficient in data organization.

#TAR EXTRACT XZ FULL#

Tar.xz is a compressed data file type capable of storing data of multiple types and from various applications.

For example, when flashing directly from the internet, I found that some USB devices occasionally did a flush or something, and that causes a periodic slowdown. Even if it didn't, without a buffer the whole pipe is sensitive to slowdowns: it would immediately slow down all at once, and would rarely ever reach full speed. With two consecutive buffers, the pipe could keep downloading and extracting for a while in the background.
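As a rough sketch of the two-buffer pipe described above: a real run would read from a download and write to a raw device, but to stay runnable and harmless this version substitutes a locally generated stand-in image and a plain file as the target. It uses `mbuffer` for the buffer stages if it is installed and falls back to `cat` (no buffering) otherwise. The tool choice, the 64M buffer size, and all file names are assumptions, not the original script.

```shell
workdir=$(mktemp -d)
src_img="$workdir/source.img"
target_img="$workdir/target.img"   # stand-in for a raw device such as an SD card

# Stand-in for a downloaded image: 4 MiB of random data, compressed with xz.
head -c 4194304 /dev/urandom > "$src_img"
xz -c "$src_img" > "$workdir/source.img.xz"

# One buffer stage: mbuffer if available (assumed size 64M), otherwise a pass-through.
buffer_stage() {
    if command -v mbuffer >/dev/null 2>&1; then
        mbuffer -q -m 64M
    else
        cat
    fi
}

# "download" | buffer | extract | buffer | write
# In a real run the first stage would be the download command instead of cat.
cat "$workdir/source.img.xz" \
    | buffer_stage \
    | xz -dc \
    | buffer_stage \
    | dd of="$target_img" bs=1M iflag=fullblock status=none

# Verify that the written image matches the original.
cmp "$src_img" "$target_img" && echo "image written correctly"
```

With a buffer on each side of the decompressor, a stall in the writer does not immediately stall the reader, which is the effect the paragraph above describes.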

Now I just use "cp" - it's simple to use and fast enough in practice (slightly faster than dd bs=1m). Just for my interest, why are you using "bs=10M" for the dd command? The cp command (which is very fast in my tests) uses just a 128KB block size (see strace cp.

In my tests, I found it gives a bit of a buffer. It usually cut down on download time by around 3 seconds (and when you want to have the world's fastest img downloader, every second counts). Additionally, in my script I go a couple of steps further by loading the first 100MB of the img into cache first, and it also uses the buffer package to overcome I/O bottlenecks for maximum efficiency.

What if you don't even use dd, just `wget. I'm pretty sure that a simple '>' would do it one bit at a time.

For example, I usually download the image to a memory disk, then uncompress it and copy it to the raw device. So, given that the disk read times are near zero for this case only, what's the best read/write block size to give dd for the optimal overlap between reads and writes? I gave up trying to calculate everything and produce an optimal C program!
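One way to explore the block-size question empirically is sketched below. It times `dd` at a few block sizes against `cp` on a scratch file. The 8 MiB file size, the chosen block sizes, and the helper names are assumptions for illustration, and it relies on GNU `date` and `dd`. The timings only describe the machine and storage it runs on, so treat them as a starting point rather than a general answer.

```shell
workdir=$(mktemp -d)
src="$workdir/source.img"
head -c 8388608 /dev/urandom > "$src"   # 8 MiB scratch file (assumed size)

# Run a command and print how long it took in milliseconds (needs GNU date for %N).
time_ms() {
    start=$(date +%s%N)
    "$@"
    end=$(date +%s%N)
    echo "$(( (end - start) / 1000000 )) ms: $*"
}

# cp followed by a sync, so the flush to disk is included like dd's conv=fsync.
copy_with_cp() {
    cp "$1" "$2" && sync
}

# dd at several block sizes; conv=fsync makes dd flush the output before exiting.
for bs in 128K 1M 10M; do
    time_ms dd if="$src" of="$workdir/dd_$bs.img" bs="$bs" conv=fsync status=none
done

time_ms copy_with_cp "$src" "$workdir/cp.img"
```

To test a real target, replace the output paths with the device or mount point you actually flash to, and use an image large enough that caching does not dominate the result.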

Believe it or not, with enough optimization, it can be faster to download an img, extract it, and flash it to an SD card than to flash a local, pre-extracted one. It's incredibly complicated given: different read/download speeds, decompression speeds, indeterminate overlap between reads and writes, disk write speeds, variable asynchronous disk block prefetch ranges, "sync" time, verification time, etc. Given all the above variables, any simple benchmark results will be meaningless.
